Finding Bottlenecks In ASP.NET Core With Jaeger

Published on August 21, 2024 by Fabian Stadler

When launching APIs to production, it is crucial that your endpoints are fast and reliable. In your development environment, you might not notice any performance issues. However, once you receive a lot of traffic, some endpoints can turn out to be slow and unreliable.

These bottlenecks that often only show during peak usage are difficult to identify. In this article, I will show you how I investigated such a bottleneck in one of my recent projects and how I could reduce RAM and CPU usage considerably.

Performance is key when it comes to high-traffic APIs. (C) StockSnap on Pixabay


Profiling the Database vs. Profiling the Code

The project I was working on used a set of API containers behind an HAProxy load balancer. The API in question was based on ASP.NET Core 8 and had endpoints that were used to create inventory data including images and product information. Because the containers ran inside a Docker cluster, their memory and CPU usage were limited, with an average memory usage of about 200MB per container.

The issue that arose was that during high traffic hours, the memory would spike to more than 1GB, eventually causing the container to crash and requests to be rejected for a few seconds. Because most requests around the time the issue arose only handled database queries, I assumed there was an issue with slow queries and requests piling up.

In such a scenario, you have two choices. Either you profile the database itself, which is insightful if you want to know how fast the queries are in general. However, this does not account for the overhead of the object-relational mapper, namely Entity Framework Core in this case. Or you profile the application code, which captures the full time an endpoint spends on a query, including that overhead.

Setting up OpenTelemetry in ASP.NET Core

Therefore, I went with investigating the latter first. For this, you can instrument your code with OpenTelemetry. This is an open-source observability framework that provides APIs and SDKs for collecting telemetry data about software performance and behavior. It supports traces, metrics, and logs, and it integrates with popular libraries and frameworks across several languages.

To set up OpenTelemetry in ASP.NET Core, I installed the following NuGet packages:

    dotnet add package OpenTelemetry.Exporter.Console --version 1.9.0
    dotnet add package OpenTelemetry.Exporter.OpenTelemetryProtocol --version 1.9.0
    dotnet add package OpenTelemetry.Exporter.Prometheus.AspNetCore --version 1.9.0-beta.2
    dotnet add package OpenTelemetry.Extensions.Hosting --version 1.9.0
    dotnet add package OpenTelemetry.Instrumentation.AspNetCore --version 1.9.0

Afterward, I had to set up OpenTelemetry in my ASP.NET Core application. This can be done in the Program.cs file as follows. As you can see, I made the service name and version configurable via environment variables (or the appsettings.json file). You also need to specify the endpoint of an OpenTelemetry collector. But more on that later.

    using OpenTelemetry.Logs;
    using OpenTelemetry.Metrics;
    using OpenTelemetry.Resources;
    using OpenTelemetry.Trace;

    // Read the telemetry settings and fail fast if a required value is missing.
    var tracingOtlpEndpoint = Configuration["OpenTelemetry:EndpointURL"] ?? throw new InvalidOperationException("OT endpoint URL not found.");
    var serviceName = Configuration["OpenTelemetry:ServiceName"] ?? throw new InvalidOperationException("OT service name not found.");
    var serviceVersion = Configuration["OpenTelemetry:ServiceVersion"] ?? throw new InvalidOperationException("OT service version not found.");

    // Logging: write to the console and attach the service name and version to each log record.
    services.AddLogging(builder =>
    {
        builder.ClearProviders();
        builder.AddConsole();
        builder.AddOpenTelemetry(options => options
            .SetResourceBuilder(ResourceBuilder.CreateDefault().AddService(
                serviceName: serviceName,
                serviceVersion: serviceVersion))
            .AddConsoleExporter()
        );
    });

    services.AddOpenTelemetry()
        .ConfigureResource(resource => resource.AddService(
            serviceName: serviceName,
            serviceVersion: serviceVersion))
        // Tracing: collect spans from the listed sources and send them to the collector via OTLP.
        .WithTracing(tracing =>
        {
            tracing.AddSource(serviceName);
            tracing.AddSource("Microsoft.EntityFrameworkCore");
            tracing.AddAspNetCoreInstrumentation();
            tracing.AddOtlpExporter(otlpOptions =>
            {
                otlpOptions.Endpoint = new Uri(tracingOtlpEndpoint);
            });
        })
        // Metrics: collect the listed meters and make them available to Prometheus.
        .WithMetrics(metrics => metrics
            .AddMeter(serviceName)
            .AddMeter("Microsoft.AspNetCore.Hosting")
            .AddMeter("Microsoft.AspNetCore.Server.Kestrel")
            .AddPrometheusExporter()
        );

Once you've added this code, you're almost ready to go. ASP.NET Core will now automatically trace all incoming requests to your controllers, which means you're already able to profile the speed of your endpoints without any further code changes.
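The setup reads three configuration keys. As a minimal sketch of how those keys can be supplied, the following builds a configuration from in-memory defaults and then from environment variables. The class name and the service name and version values are hypothetical. The double underscore in an environment variable name is the standard .NET replacement for the colon in a configuration key.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Configuration;

public static class TelemetryConfigSketch
{
    // Builds a configuration with default values that environment variables can override.
    public static IConfiguration Build()
    {
        return new ConfigurationBuilder()
            .AddInMemoryCollection(new Dictionary<string, string?>
            {
                ["OpenTelemetry:EndpointURL"] = "http://otel-collector:4317",
                ["OpenTelemetry:ServiceName"] = "inventory-api", // hypothetical value
                ["OpenTelemetry:ServiceVersion"] = "1.0.0",      // hypothetical value
            })
            // Added last, so OpenTelemetry__ServiceVersion=2.0.0 in the environment
            // overrides the "OpenTelemetry:ServiceVersion" default above.
            .AddEnvironmentVariables()
            .Build();
    }
}
```

In a container setup, this means the same image can report under a different service name or version simply by setting variables such as OpenTelemetry__ServiceName in the docker-compose file.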

Custom traces for more visibility

For the problem specified above, I needed to measure the speed of my database queries. Therefore, I had to add further tracing to my code. This can be done by using a Tracer class.

First of all, you need to register a Tracer for your service. It can be added as a singleton right after the OpenTelemetry setup in the Program.cs file.

    services.AddSingleton(TracerProvider.Default.GetTracer(serviceName));

The OpenTelemetry setup also makes the TracerProvider itself available via dependency injection. You can therefore request it in the constructor of a controller, create further Tracers from it, and keep them in a private field.

    _efTracer = tracerProvider.GetTracer("Microsoft.EntityFrameworkCore");
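To make the constructor injection concrete, here is a minimal sketch of such a controller. The controller name, route, and default parameter values are assumptions for illustration; only the GetTracer call and the tracer name come from the setup described in this article.

```csharp
using Microsoft.AspNetCore.Mvc;
using OpenTelemetry.Trace;

[ApiController]
[Route("api/[controller]")]
public class OffersController : ControllerBase // hypothetical controller name
{
    // Tracer used for all database-related spans of this controller.
    private readonly Tracer _efTracer;

    // The TracerProvider is resolved from dependency injection.
    public OffersController(TracerProvider tracerProvider)
    {
        _efTracer = tracerProvider.GetTracer("Microsoft.EntityFrameworkCore");
    }

    [HttpGet]
    public IActionResult GetOffers(int limit = 50, int offset = 0)
    {
        // Spans around the database work would be started on _efTracer here.
        return Ok(new { limit, offset });
    }
}
```

Keeping the tracer in a readonly field keeps the action methods free of lookup code, and the tracer name matches the source registered with AddSource in the setup.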

Controllers in ASP.NET Core are created per request, so each request obtains its own Tracer reference. Calling the Tracer.StartActiveSpan method creates a span that marks the start of an activity and becomes the parent of any sub-activities started while it is active. You can also add attributes from variables for further information; Jaeger displays them as tags.

    using var span = _efTracer.StartActiveSpan("Database");
    span.SetAttribute("limit", limit);
    span.SetAttribute("offset", offset);

Here, the limit and offset variables are used to tell requests with different parameters apart. This is optional. In general, tags make it easier to filter traces by certain variables and to compare them to each other.
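The parent and child relationship between activities, and the tags attached to them, can be tried out without a running collector. The sketch below swaps in .NET's built-in ActivitySource and ActivityListener, the layer the OpenTelemetry .NET Tracer builds on, rather than the Tracer used in this article. The source name and the "ImageProcessing" span are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

public static class SpanSketch
{
    // Hypothetical source name; in the API this would be the configured service name.
    private static readonly ActivitySource Source = new("InventoryApi.Sketch");

    // Runs one simulated request and returns the finished activities in the order they stopped.
    public static List<Activity> Run(int limit, int offset)
    {
        var finished = new List<Activity>();

        // The listener stands in for the OpenTelemetry SDK. Without a listener,
        // StartActivity returns null and nothing is recorded.
        using var listener = new ActivityListener
        {
            ShouldListenTo = source => source.Name == Source.Name,
            Sample = (ref ActivityCreationOptions<ActivityContext> options) =>
                ActivitySamplingResult.AllDataAndRecorded,
            ActivityStopped = activity => finished.Add(activity),
        };
        ActivitySource.AddActivityListener(listener);

        using (var request = Source.StartActivity("GetOffers"))
        {
            using (var database = Source.StartActivity("Database"))
            {
                // Tags make it possible to filter and compare traces later.
                database?.SetTag("limit", limit);
                database?.SetTag("offset", offset);
                // The database query would run here.
            }

            using (var images = Source.StartActivity("ImageProcessing"))
            {
                // The image processing would run here.
            }
        }

        return finished;
    }
}
```

Calling SpanSketch.Run(50, 100) yields three activities. The two inner ones carry the outer "GetOffers" activity as their parent, which is the structure Jaeger later renders as a nested timeline.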

Collecting and analyzing traces with Jaeger

Jaeger is a distributed tracing observability platform that maps the flow of requests and data as they traverse a distributed system. Not long ago, it had its own backend that you could connect your OpenTelemetry collector to. Now, Jaeger has a full OpenTelemetry collector integrated. The easiest way to connect to it is by setting up Jaeger's all-in-one Docker container with docker-compose.

    otel-collector:
      image: jaegertracing/all-in-one:latest
      ports:
        - "4317:4317"
        - "4318:4318"
        - "6831:6831/udp"
        - "8888:8888"
        - "8889:8889"
        - "16686:16686"
      environment:
        - COLLECTOR_OTLP_ENABLED=true

In my REST API configuration, I've set the OpenTelemetry endpoint to http://otel-collector:4317, as my API is running on the same Docker network as the Jaeger container. If you're running your API on a different network, you will have to set the endpoint to a host name or IP address under which your Jaeger container is reachable.
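The compose file publishes both OTLP ports: 4317 for gRPC, which the OTLP exporter uses by default, and 4318 for HTTP. As a hedged sketch, the helper below validates a configured base URL and applies it to the exporter options for either protocol. The class and method names are hypothetical. When HTTP is selected and the endpoint is set in code, the exporter expects the full URL including the v1/traces path, so the helper appends it.

```csharp
using System;
using OpenTelemetry.Exporter;

public static class OtlpEndpointSketch
{
    // Applies a configured base URL to the exporter options for the chosen protocol.
    public static void Apply(OtlpExporterOptions options, string? baseUrl, OtlpExportProtocol protocol)
    {
        // Fail fast on a missing or relative URL instead of failing later during export.
        if (!Uri.TryCreate(baseUrl, UriKind.Absolute, out var baseUri))
        {
            throw new InvalidOperationException($"Invalid OTLP endpoint: '{baseUrl}'.");
        }

        options.Protocol = protocol;

        // gRPC uses the base address as is; HTTP needs the signal-specific path.
        options.Endpoint = protocol == OtlpExportProtocol.HttpProtobuf
            ? new Uri(baseUri, "v1/traces")
            : baseUri;
    }
}
```

Inside the tracing setup, this could be called as tracing.AddOtlpExporter(o => OtlpEndpointSketch.Apply(o, tracingOtlpEndpoint, OtlpExportProtocol.Grpc)).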

Jaeger comes with a powerful UI to analyze your traces. You can see the duration of your queries, the tags you've added to the activities, and the actual query that was executed. This can be very helpful to identify bottlenecks in your code and to optimize your database queries. If you've instrumented your code and set up Jaeger correctly, you can access the UI on port 16686.

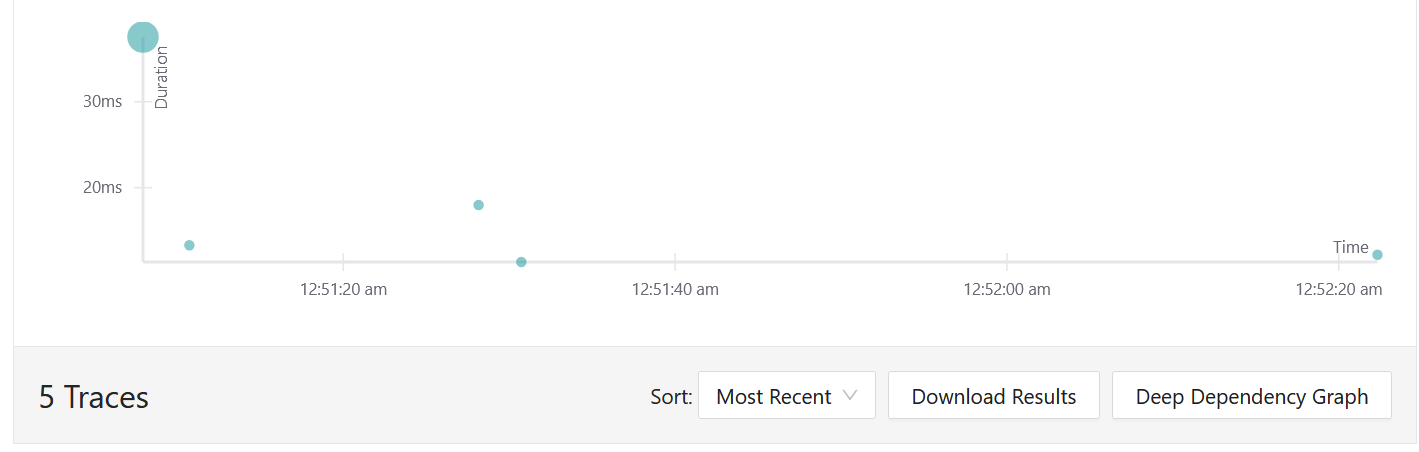

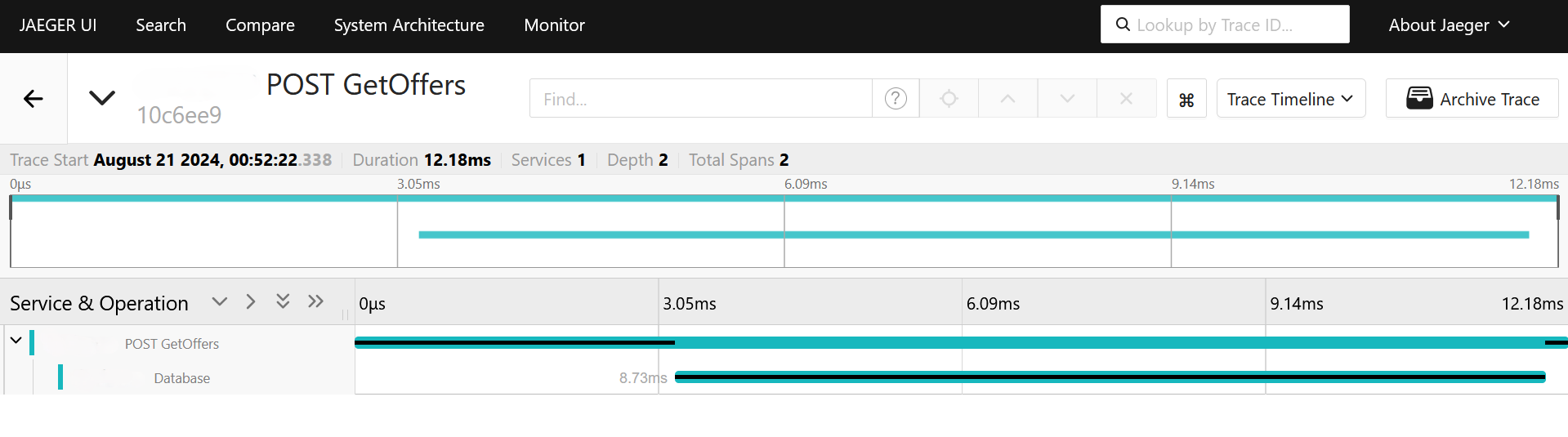

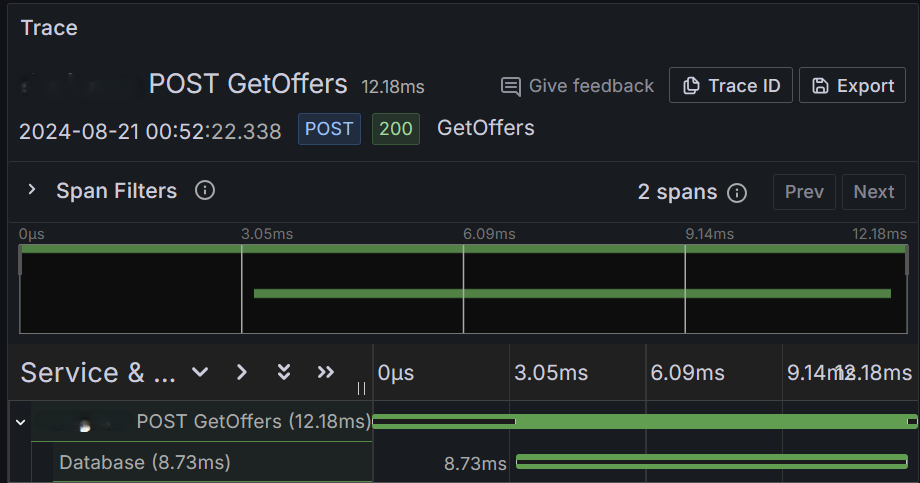

After selecting the service and the type of trace you want to analyze, you will see each trace and its runtime in a graph. This can be very helpful to identify outliers and to optimize certain endpoints. For more information, you can click on any trace to see all the activities it contains in a timeline. This shows you the duration of each activity and the tags you've added to it.

Bonus: Exporting traces to Grafana

If you don't want to use the Jaeger UI and already use Grafana, you can also view the traces there. Just add Jaeger as a data source to Grafana and you're good to go. For the data source URL, choose the port of the Jaeger UI, in my case 16686. Then, you can create dashboards in Grafana to visualize your traces and to get a better overview of your API's performance.

By analyzing this example trace, one can easily see that the database part of the endpoint GetOffers takes roughly 2/3 of the total time. This is okay, considering that most of the endpoint's work consists of building and executing the query.

Making bottlenecks visible

In my project, I was able to identify that the query time was actually pretty low in comparison to the time spent on processing image data. This resulted in a spike of CPU usage during high traffic hours. The resulting CPU throttling then caused the memory to spike, eventually causing containers to crash.

By analyzing the traces, I was able to identify the bottleneck and to optimize the code. This resulted in a 90% speed increase and helped to keep the RAM usage at a stable level.

As you can see, OpenTelemetry and Jaeger make it easy to measure the speed of your code. By using the Jaeger UI, you can easily analyze traces and identify outliers. Connecting Jaeger to Grafana, you can even create dashboards to visualize your traces. This will make finding bottlenecks in your code a breeze.

If you have any questions or feedback, feel free to send me an email or reach out to me on any of my social media channels.